
Why Are TV Channels Still Numbered, Anyway?

In the on-demand, programmatically bought future, fitting content into numbered slots will seem antiquated and superfluous. Our great-grandchildren will hardly believe we ever did things this way.

When I was a kid, we had a small black-and-white TV with a dial. We had our choice of channels 2 through 13, plus another knob for the vast UHF wasteland. No offense, Weird Al.

Television was presented to us as an ordered list, because that was how it was broadcast. Each TV station had to broadcast on a different frequency, so stations were assigned orderly little slots. Because geographically adjacent markets needed to alternate which slots they used, and TV makers didn’t want to manufacture different knobs for each market, the slots were given numbers, and the numbers were printed on the knobs in sequence. Channel 4 broadcast at a slightly higher frequency than Channel 3, but a lower frequency than Channel 5.

Then along came cable TV. Cable inherited this legacy even though it was not bound by broadcast frequencies. Consumers needed to know that if they subscribed to cable, they would still get “Channel 2” and “Channel 7”, plus new cable networks with their own arbitrary numbers. Why train people on a whole new system, right? Cable was just like “regular” TV, only with much more of it. It was also easier to show a number on the front of the cable box than the name of a channel, so the numbering model remained useful. And cable companies could use “low” numbers as leverage in negotiations with networks.

This model doesn’t hold for newer forms of content. The Huffington Post isn’t “Website number 802.” Of course it isn’t; numbering websites would be superfluous and silly. You don’t navigate up and down through a list of websites; you just type in what you want based on its descriptive name and go there. Your only hurdle, perhaps, is remembering which vowels to leave out of the domain name.

A slow revolution of sorts is beginning to take hold that will finally challenge the numbered-TV model. You may have heard it referred to as “cord-cutting” or as the adoption of “over-the-top” entertainment options. This trend doesn’t just mean people cancelling cable and watching Netflix instead. It challenges the 70-year-old definition of what a “network” is and the 7-year-old definition of what an “app” is (vis-à-vis entertainment).

Right now, we have cable networks with linear programming, and we have apps with supplementary content about those cable networks. The logical conclusion of current trends is that the two merge: linear TV broadcasts of live events, plus on-demand shows, all living within an app that is ad-supported (e.g. Hulu), subscription-based (e.g. something like HBO Go or Netflix), or some sort of hybrid. Imagine a world where you subscribe to “cable”, but instead of 500 numbered channels, you get access to 500 apps. Each app has the “TV network” as you used to know it embedded within it, plus supplementary functionality. So your ESPN app includes live coverage plus a widget showing scores, and your History Channel app offers an interactive map of the best ice road trucking routes alongside the show of the same name.

You don’t necessarily lose the ability to bundle content the way cable does currently. For instance, a parent company with many cable networks could bundle them together into one subscription app, effectively becoming a mini-cable company unto itself. A user could then rebuild an approximation of current-day cable service by subscribing to some of these bundles. At that point, the idea of assigning numbers to these apps would seem completely ridiculous. As content providers shift to this model, the decline of the channel number begins. The last vestige will be over-the-air broadcasts, but even there, any remotely modern digital tuner could list channels by name rather than number with a simple firmware upgrade.

And what would that mean for advertising? Well, it’s a whole new world. The 30- and 60-second spot formats will erode in favor of a new set of standards for video and interactive ads. These new standard units will be distributed in real time across a vast sea of platforms, apps, and experiences based on programmatic algorithms. In parallel, there will be enormous opportunities for specialized sponsorships, apps, games, and experiences that can rise above the clutter and integrate themselves into audiences’ lives. If you need help imagining this future, just look at where digital display advertising has been headed.

We’re already reading about Apple bypassing the cable companies and going directly to content providers for its long-awaited TV offering. Netflix’s original programming is another example of what this future may look like (albeit without ads). The more original programming Netflix produces, the more it resembles what we currently think of as a TV network. Except it never had a channel number, never needed one, and never will.

Are All Screens Created Equal? A Research Study by the IPG Media Lab

IPG Media Lab and YuMe partnered on a media trial to answer the following questions:

1) Does device/screen have an impact on the effectiveness of video ads?

2) Do other variables play a role in video ad effectiveness?

– Ad clutter

– Creative quality

– Type of video content

– Location of consumption

Download the full report here: Yume Presentation 7_25_12

The Eyeballs Will Be Monetized

Much has been made of Google’s newly awarded Pay-per-Gaze patent for a mysterious “head mounted gaze tracking device which captures external scenes viewed by a user wearing the head mounted device” (I wonder what they could be referring to). The device would monitor the pupils of its wearers to infer emotion and track which ads they are looking at.

If it’s not too wild a presumption that they are referring to Google Glass, then Glass seems the obvious delivery mechanism for the patented technology, though there are certainly a few technicalities to work out first.

At the Lab, we’ve been in the business of “monetizing eyeballs” for years, using eye-tracking and other attention- and emotion-detecting technologies to benchmark ad effectiveness. Thanks to the prevalence of webcams and the growing sophistication of biometric software over the past year, we’ve been able to amass sample sizes in the thousands in our research studies.

Being able to do this with Glass, and gauge consumer sentiment to stimuli out in the real world, is an extremely exciting proposition for research.

Given how invasive this is, the key is to have consumers opt in, and to give them a pretty good incentive to do so. We imagine only a sample of the population would participate, as in a large-scale research study or a Nielsen panel. Or perhaps consumers at large will be paid to have their personal data tracked, possibly paving the way toward a data economy in which consumers receive micro-payments in exchange for sharing personal data, as envisioned by Jaron Lanier in his book “Who Owns the Future?”

For more information, contact [email protected].

On the Brains and Brawn of Google Glass

As Computerworld reported this week, Google Glass will not be available to consumers until 2014. We forecast as much in the Lab’s 2013 Outlook, which named Glass among the life-changing technologies that would remain on the horizon for the time being.

The article cites consumer comfort levels with Glass as a reason for the ‘delayed’ launch, but as we said at the start of the year, cost and battery power may be bigger hurdles (though by no means the only ones).

Back in December, Glass engineer Babak Parviz said “[Battery power is] a valid concern. We have done a lot of work in this area, and it is still a work in progress. Our hope is that the battery life would be sufficient for the whole day. That’s our target. So you would put the device on in the morning and you’d go about your daily routine. By the time you got back home, the device would still be functioning.”

Having tested Glass at the Lab, we’d be lucky if the battery lasted 2 hours, so there is a long way to go in that respect.

At the Lab, we imagine a future where consumers will wear their tech. The smartphone changed many a consumer’s life practically overnight. But because of its cumbersome form factor (it’s all relative…), we believe it is a bridging technology to a world where tech will retreat into the background and, as Pranav Mistry elegantly put it in his 2009 TED Talk, “help us to stay human and stay more connected to our physical world.”

The smartphone will, however, continue to play a major role in this future: not as the interface consumers interact with, but as the ‘brains and brawn’ behind our peripheral interfaces. A great example is Kickstarter darling the Pebble Watch, which uses Bluetooth to tap the functionality and processing power of a smartphone to display the phone’s notifications.

Looking into our crystal ball, we imagine that within two iterations, Glass will also be powered by the smartphone. This makes sense, since the phone is both powerful and subsidized. It would start to address two of Glass’s biggest issues: battery life and expensive guts.

Flash POV: Viacom’s Deal with Sony: Are They Hedging Their Bets?

Although neither company has made any official statements, news reached the press late last week that Viacom and Sony had come to a tentative agreement for streaming the former’s networks on a yet-to-be-released Sony video platform. Details are sparse, as the two companies are presumably still working out the finer points of the deal, but here is what we know thus far:

+ The service would feature live feeds of the Viacom networks, the same as any MVPD would get

+ It would initially be available on connected PlayStations and potentially Sony smart TVs

The new streaming service would be competitive with similar products in the works from Google and Intel, but if the deal holds, it will be the first of the three to have secured live TV feeds. With content owners up in arms about Aereo’s live TV service and MVPDs facing increased competition from over-the-top solutions, this type of agreement seems counter to the industry’s interest in protecting the existing TV model. So why do it? We can only speculate at this point, but here are two potential reasons:

+ Viacom doesn’t have corporate ties to any particular multichannel provider, and has been notoriously aggressive in its negotiations with them. We seriously doubt NBCUniversal (Comcast) or the Turner Networks (Time Warner Cable) would be part of this kind of deal unless it were somehow synergistic. With multichannel subscriptions set to peak this year (see the latest Media Economy Report), perhaps Viacom is laying the groundwork to make sure its channels remain viewable in a broadband-delivered video world.

+ In theory, the live feeds streamed via Sony’s service would include only the national ads. Since multichannel providers typically have some local ad time to sell every hour, Sony could generate some ad revenue of its own by filling those gaps.

We estimate that there are currently about 22 million connected PlayStations in the US, which would give Sony’s new video service a relatively small footprint to start with. Subscription fees will be needed to finance the carriage of Viacom’s networks, which will further limit uptake. All in all, we don’t expect the service to have any meaningful impact on the marketplace, especially since the user experience won’t be à la carte or on demand; it will be the same as receiving the networks via cable.

However, it does bring a familiar issue to the forefront, one we have been citing for some time now. The MVPDs control most of the broadband connections in the country. As consumers eschew traditional cable subscriptions for broadband video delivery, those companies will naturally charge more and more for internet access to make up for those losses. This was confirmed by former Time Warner Cable CEO Glenn Britt, who was quoted in a New York Times article as saying: “The reality is, if everybody watched TV over the Internet, and we were out of the TV business, then we would have to recover more money from the Internet service.”

At that point, the question becomes: is it really “cord-cutting?” Or just “cord switching?”

For more information, contact [email protected] or [email protected].

Flash POV: The Next Level of Targeting: Anticipatory Computing

In March, we predicted that 26 percent of online display ads would be bought programmatically this year, and that the share would climb to 50 percent by 2017. And that’s just a single medium.

In addition to being data-driven and efficient, programmatic buying offers another benefit: the ability to get your message in front of more receptive audiences. A research study conducted by our colleagues at the IPG Media Lab and AOL demonstrated that real-time ads consistently generated more interaction. The idea of real-time targeting is to create a mutually beneficial scenario for brand and consumer: you’re offering something they need. But what if you could anticipate those needs before they even arose?

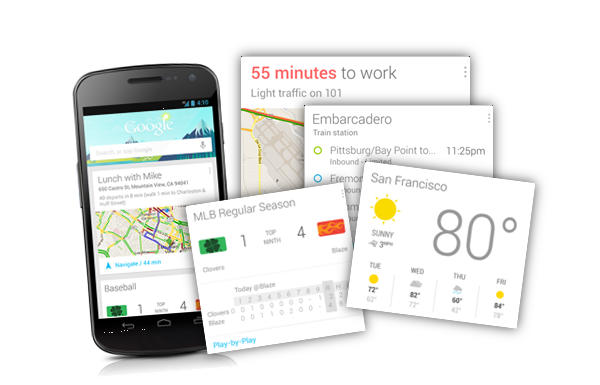

That is the promise of anticipatory computing, which, rather than waiting for the user to make a query, uses passively collected data streams like sound and location to provide information proactively. The Lab staff have cited this as a trend to watch this year, and were recently approached by research firm Forrester to provide input for a study on the topic. One example of software that uses this technology is Google Now, which serves up virtual “cards” throughout the day to keep you updated on topics of interest, travel information, and calendar events without prompting.

While this is still a new space for advertisers, it has already been used successfully. Earlier this year, Kleenex used a combination of Google search and public health data in the UK to anticipate which regions and cities would experience flu outbreaks, and adjusted its media plan accordingly. The result was a 40 percent year-over-year increase in tissue sales.

If the goal of real-time marketing is to be relevant and helpful, anticipatory computing represents a way to go one step further. Brands will increasingly use personal data to target consumers with information they will find valuable precisely when they need it, and it won’t be long before consumers expect nothing less.

For more information, contact [email protected] or [email protected].

Stratasys Acquires MakerBot

At the end of June, MakerBot and Stratasys confirmed what many already knew: that Stratasys would buy MakerBot for $403 million. Today, that deal is official, and it means that Stratasys now has a non-industrial, consumer-facing 3D printing arm. There is demonstrable demand in this market, as MakerBot, Formlabs, and others have shown. MakerBot was one of the first to offer affordable, in-home 3D printing, and the merger means there is now a full-service company within the industry that offers both enterprise and at-home products. And with 25+ years of experience from the Stratasys team behind it, MakerBot is sure to elevate its printing game.